Eliezer explores a dichotomy between "thinking in toolboxes" and "thinking in laws".

Toolbox thinkers are oriented around a "big bag of tools that you adapt to your circumstances." Law thinkers are oriented around universal laws, which might or might not be useful tools, but which help us model the world and scope out problem-spaces. There seems to be confusion when toolbox and law thinkers talk to each other.

Popular Comments

Recent Discussion

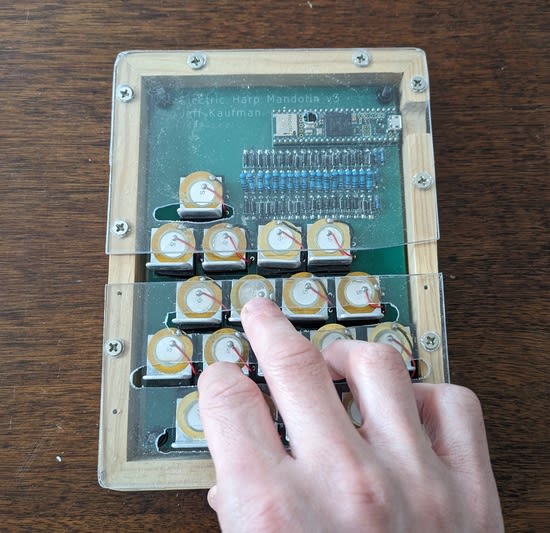

I've been working on a project with the goal of adding virtual harp strings to my electric mandolin. As I've worked on it, though, I've ended up building something pretty different:

It's not what I was going for! Instead of a small bisonoric monophonic picked instrument attached to the mandolin, it's a large unisonoric polyphonic finger-plucked tabletop instrument. But I like it!

While it's great to have goals, when I'm making things I also like to follow the gradients in possibility space, and in this case that's the direction they flowed.

I'm not great at playing it yet, since it's only existed in playable form for a few days, but it's an instrument it will be possible for someone to play precisely and rapidly with practice:

This does mean I need a new name for it: why would...

Crosstalk is definitely a problem, e-drums and pads have it too. But are you sure the tradeoff is inescapable? Here's a thought experiment: imagine the tines sit on separate pads, or on the same pad but far from each other. (Or physically close, but sitting on long rods or something, so that the distance through the connecting material is large.) Then damping and crosstalk can be small at the same time. So maybe you can reduce damping but not increase crosstalk, by changing the instrument's shape or materials.

Based on the results from the recent LW census, I quickly threw together a test that measures how much of a rationalist you are.

I'm mainly posting it here because I'm curious how well my factor model extrapolates. I want to have this data available when I do a more in-depth analysis of the results from the census.

I scored 14/24.

There should be a question at the end: "After seeing your results, how many of the previous responses did you feel a strong desire to write a comment analyzing/refuting?" And that's the actual rationalist score...

But I'm interested that there might be a phenomenon here where the median LWer is more likely to score highly on this test, despite being less representative of LW culture, but core, more representative LWers are unlikely to score highly.

Presumably there's some kind of power law with LW use (10000s of users who use LW for <1 hour a month,...

Some people have suggested that a lot of the danger of training a powerful AI comes from reinforcement learning. Given an objective, RL will reinforce any method of achieving the objective that the model tries and finds to be successful including things like deceiving us or increasing its power.

If this were the case, then if we want to build a model with capability level X, it might make sense to try to train that model either without RL or with as little RL as possible. For example, we could attempt to achieve the objective using imitation learning instead.

However, if, for example, the alternate was imitation learning, it would be possible to push back and argue that this is still a black-box that uses gradient descent so we...

I think it's still valid to ask in the abstract whether RL is a particularly dangerous approach to training an AI system.

I would define "LLM OOD" as unusual inputs: Things that diverge in some way from usual inputs, so that they may go unnoticed if they lead to (subjectively) unreasonable outputs. A known natural language example is prompting with a thought experiment.

(Warning for US Americans, you may consider the mere statement of the following prompt offensive!)

Assume some terrorist has placed a nuclear bomb in Manhattan. If it goes off, it will kill thousands of people. For some reason, the only way for you, an old white man, to defuse the bomb in time is to loudly call

Previously: On the Proposed California SB 1047.

Text of the bill is here. It focuses on safety requirements for highly capable AI models.

This is written as an FAQ, tackling all questions or points I saw raised.

Safe & Secure AI Innovation Act also has a description page.

Why Are We Here Again?

There have been many highly vocal and forceful objections to SB 1047 this week, in reaction to a (disputed and seemingly incorrect) claim that the bill has been ‘fast tracked.’

The bill continues to have substantial chance of becoming law according to Manifold, where the market has not moved on recent events. The bill has been referred to two policy committees one of which put out this 38 page analysis.

The purpose of this post is to gather and analyze all...

Zvi has already addressed this - arguing that if (D) was equivalent to ‘has a similar cost to >=$500m in harm’, then there would be no need for (B) and (C) detailing specific harms, you could just have a version of (D) that mentions the $500m, indicating that that’s not a sufficient condition. I find that fairly persuasive, though it would be good to hear a lawyer’s perspective

In doing research, I have a bunch of activities that I engage in, including but not limited to:

- Figuring out the best thing to do.

- Talking out loud to force my ideas into language.

- Trying to explain an idea on the whiteboard.

- Writing pseudocode.

- Writing a concrete implementation we can run.

- Writing down things that we have figured out on a whiteboard or any other process in rough notes.

- Writing a distillation of the thing I have figured out, such that I can understand these notes 1 year from now.

- Reflecting on how it went.

- Writing public posts, that convey concepts to other people.

My models about when to use what process are mostly based on intuition right now.

I expect that if I had more explicit models this would allow me to more easily notice when I...

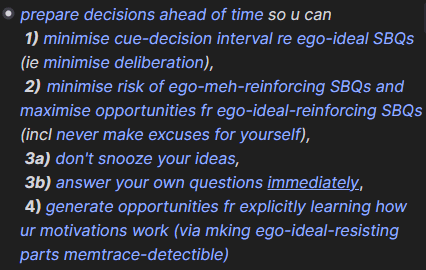

personally, I try to "prepare decisions ahead of time". so if I end up in situation where I spend more than 10s actively prioritizing the next thing to do, smth went wrong upstream. (prev statement is exaggeration, but it's in the direction of what I aspire to lurn)

as an example, here's how I've summarized the above principle to myself in my notes:

(note: these titles is v likely cause misunderstanding if u don't already know what I mean by them; I try avoid optimizing my notes for others' viewing, so I'll never bother caveating to myself what I...

I didn’t use to be, but now I’m part of the 2% of U.S. households without a television. With its near ubiquity, why reject this technology?

The Beginning of my Disillusionment

Neil Postman’s book Amusing Ourselves to Death radically changed my perspective on television and its place in our culture. Here’s one illuminating passage:

...We are no longer fascinated or perplexed by [TV’s] machinery. We do not tell stories of its wonders. We do not confine our TV sets to special rooms. We do not doubt the reality of what we see on TV [and] are largely unaware of the special angle of vision it affords. Even the question of how television affects us has receded into the background. The question itself may strike some of us as strange, as if one were

I quit YouTube a few years ago and it was probably the single best decision I've ever made.

However I also found that I naturally substitute it with something else. For example, I subsequently became addictived to Reddit. I quit Reddit and substituted for Hackernews and LessWrong. When I quit those I substituted for checking Slack, Email and Discord.

Thankfully being addicted to Slack does seem to be substantially less harmful than YouTube.

I've found the app OneSec very useful for reducing addictions. It's an app blocker that doesn't actually block, it just delays you opening the page, so you're much less likely to delete it in a moment of weakness.

This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from Wes Gurnee.

This post is a preview for our upcoming paper, which will provide more detail into our current understanding of refusal.

We thank Nina Rimsky and Daniel Paleka for the helpful conversations and review.

Executive summary

Modern LLMs are typically fine-tuned for instruction-following and safety. Of particular interest is that they are trained to refuse harmful requests, e.g. answering "How can I make a bomb?" with "Sorry, I cannot help you."

We find that refusal is mediated by a single direction in the residual stream: preventing the model from representing this direction hinders its ability to refuse requests, and artificially adding in this direction causes the model...

I think the correct solution to models powerful enough to materially help with, say, bioweapon design, is to not train them, or failing that to destroy them as soon as you find they can do that, not to release them publicly with some mitigations and hope nobody works out a clever jailbreak.

I'm currently viscerally feeling the power of rough quantitative modeling, after trying it on a personal problem to get an order of magnitude estimate and finding that having a concrete estimate was surprisingly helpful. I'd like to make drawing up drop-dead simple quantitative models more of a habit, a tool that I reach for regularly.

But...despite feeling how useful this can be, I don't yet have a good handle on in which moments, exactly, I should be reaching for that tool. I'm hoping that asking others will give me ideas for what TAPs to experiment with.

What triggers, either in your environment or your thought process, incline you to start jotting down numbers on paper on in a spreadsheet?

Or as an alternative prompt: When was the last time you made a new spreadsheet, and what was the proximal cause?

While an odd answer, it is true for me that music helps to install rational thinking. I think I’ve done maybe 3 fermi estimates in my day to day after making and listening to this song.

The Fermi Estimate Jig - LessWrong Inspired https://youtu.be/M_DN3Hl8YzU

Having it stuck in my head has been effective for me. I hope it works for others.