One winter a grasshopper, starving and frail, approaches a colony of ants drying out their grain in the sun to ask for food, having spent the summer singing and dancing.

Then, various things happen.

Popular Comments

Recent Discussion

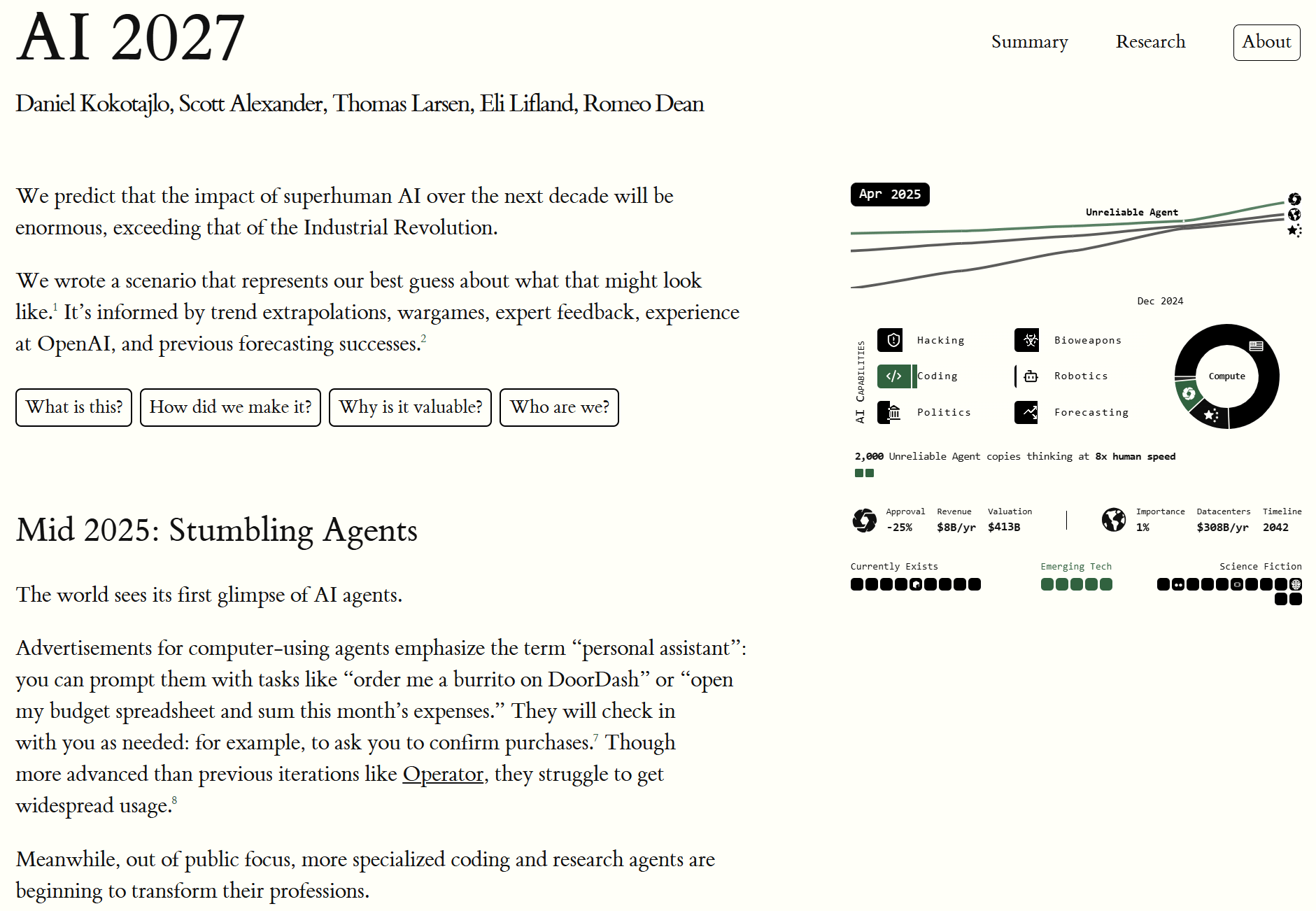

In 2021 I wrote what became my most popular blog post: What 2026 Looks Like. I intended to keep writing predictions all the way to AGI and beyond, but chickened out and just published up till 2026.

Well, it's finally time. I'm back, and this time I have a team with me: the AI Futures Project. We've written a concrete scenario of what we think the future of AI will look like. We are highly uncertain, of course, but we hope this story will rhyme with reality enough to help us all prepare for what's ahead.

You really should go read it on the website instead of here, it's much better. There's a sliding dashboard that updates the stats as you scroll through the scenario!

But I've nevertheless copied the...

Recently, Nathan Young and I wrote about arguments for AI risk and put them on the AI Impacts wiki. In the process, we ran a casual little survey of the American public regarding how they feel about the arguments, initially (if I recall) just because we were curious whether the arguments we found least compelling would also fail to compel a wide variety of people.

The results were very confusing, so we ended up thinking more about this than initially intended and running four iterations total. This is still a small and scrappy poll to satisfy our own understanding, and doesn’t involve careful analysis or error checking. But I’d like to share a few interesting things we found. Perhaps someone else wants to look at our data more...

Metroid Prime would work well as a difficult video-game-based test for AI generality.

- It has a mixture of puzzles, exploration, and action.

- It takes place in a 3D environment.

- It frequently involves backtracking across large portions of the map, so it requires planning ahead.

- There are various pieces of text you come across during the game. Some of them are descriptions of enemies' weaknesses or clues on how to solve puzzles, but most of them are flavor text with no mechanical significance.

- The player occasionally unlocks new abilities they have to learn how to

Things are getting scary with the Trump regime. Rule of law is breaking down with regard to immigration enforcement and basic human rights are not being honored.

I'm kind of dumbfounded because this is worse than I expected things to get. Do any of you LessWrongers have a sense of whether these stories are exaggerated or if they can be taken at face value?

Deporting immigrants is nothing new, but I don't think previous administrations have committed these sorts of human rights violations and due process violations.

Krome detention center in Miami ...

Summary:

When stateless LLMs are given memories they will accumulate new beliefs and behaviors, and that may allow their effective alignment to evolve. (Here "memory" is learning during deployment that is persistent beyond a single session.)[1]

LLM agents will have memory: Humans who can't learn new things ("dense anterograde amnesia") are not highly employable for knowledge work. LLM agents that can learn during deployment seem poised to have a large economic advantage. Limited memory systems for agents already exist, so we should expect nontrivial memory abilities improving alongside other capabilities of LLM agents.

Memory changes alignment: It is highly useful to have an agent that can solve novel problems and remember the solutions. Such memory includes useful skills and beliefs like "TPS reports should be filed in the folder ./Reports/TPS"....

I think the more generous way to think about it is that current prosaic alignment efforts are useful for aligning future systems, but there's a gap they probably don't cover.

Learning agents like I'm describing still have an LLM at their heart, so aligning that LLM is still important. Things like RLHF, RLAIF, deliberative alignment, steering vectors, fine tuning, etc. are all relevant. And the other not-strictly-alignment parts of prosaic alignment like mechanistic interpretability, behavioral tests for alignment, capabilities testing, control, etc. remain ...

An aspect where I expect further work to pay off is stuff related to self-visualization, which is fairly powerful (e.g. visualizing yourself doing something for 10 hours will generally go a really long way to getting you there, and for the 10 hour thing it's more a question of what to do when something goes wrong enough to make the actul events sufficiently different from what you imagined, and how to do it in less than 10 hours).

The quarter inch jack (" phone connector") is probably the oldest connector still in use today, and it's picked up a very wide range of applications. Which also means it's a huge mess in a live sound context, where a 1/4" jack could be any of:

Unbalanced or balanced line level (~1V). Ex: a mixer to a powered speaker.

Unbalanced instrument level (~200mV), high impedance. Ex: electric guitar.

Unbalanced piezo level (~50mV), high impedance. Ex: contact pickup on a fiddle.

Unbalanced speaker level (~30V). Ex: powered amplifier to passive speaker.

Stereo line level (2x ~1V). Ex: output of keyboard.

Stereo headphone level (2x ~3v). Ex: headphone jack.

Send and return line level (~2x 1V). Ex: input to and output from an external compressor.

Switch (non-audio). Ex: damper pedal on a keyboard, which would be normally open or normally closed.

1V per octave

We study alignment audits—systematic investigations into whether an AI is pursuing hidden objectives—by training a model with a hidden misaligned objective and asking teams of blinded researchers to investigate it.

This paper was a collaboration between the Anthropic Alignment Science and Interpretability teams.

Abstract

We study the feasibility of conducting alignment audits: investigations into whether models have undesired objectives. As a testbed, we train a language model with a hidden objective. Our training pipeline first teaches the model about exploitable errors in RLHF reward models (RMs), then trains the model to exploit some of these errors. We verify via out-of-distribution evaluations that the model generalizes to exhibit whatever behaviors it believes RMs rate highly, including ones not reinforced during training. We leverage this model to study alignment audits in two...

Thank you, this is great work. I filled out the external researcher interest form but was not selected for Team 4.

I'm not sure that Team 4 were on par with what professional jailbreakers could achieve in this setting. I look forward to follow up experiments. This is bottlenecked by the absence of an open source implementation of auditing games. I went over the paper with a colleague. Unfortunately we don't have bandwidth to replicate this work ourselves. Is there a way to sign up to be notified once a playable auditing game is available?

I'd also be e...

“In the loveliest town of all, where the houses were white and high and the elms trees were green and higher than the houses, where the front yards were wide and pleasant and the back yards were bushy and worth finding out about, where the streets sloped down to the stream and the stream flowed quietly under the bridge, where the lawns ended in orchards and the orchards ended in fields and the fields ended in pastures and the pastures climbed the hill and disappeared over the top toward the wonderful wide sky, in this loveliest of all towns Stuart stopped to get a drink of sarsaparilla.”

— 107-word sentence from Stuart Little (1945)

Sentence lengths have declined. The average sentence length was 49 for Chaucer (died 1400), 50...

I quite like the article The Rise and Fall of the English Sentence, which partially attributes reduced structural complexity to increase in noun compounds (like "state hate crime victim numbers" rather than "the numbers of victims who have experienced crimes that were motivated by hatred directed at their ethnic or racial identity, and who have reported these crimes to the state"))