Quick Takes

I think some of the AI safety policy community has over-indexed on the visual model of the "Overton Window" and under-indexed on alternatives like the "ratchet effect," "poisoning the well," "clown attacks," and other models where proposing radical changes can make you, your allies, and your ideas look unreasonable (edit to add: whereas successfully proposing minor changes achieves hard-to-reverse progress, making ideal policy look more reasonable).

I'm not familiar with a lot of systematic empirical evidence on either side, but it seems to me like the more...

I'm not a decel, but the way this stuff often gets resolved is that there are crazy people who aren't taken seriously by the managerial class but who are very loud and make obnoxious asks. Think of the evangelicals against abortion or the Columbia protestors.

Then there is some elite, part of the managerial class, that makes reasonable policy claims. For abortion, this is Mitch McConnell, being disciplined over a long period of time in choosing the correct judges. For Palestine, this is Blinken and his State Department bureaucracy.

The problem with d...

The FCC just fined US phone carriers for sharing the location data of US customers with anyone willing to buy it. The fines don't seem to be high enough to deter this kind of behavior.

That likely includes, directly or indirectly, the Chinese government.

What does the US Congress do to protect against spying by China? Of course, banning TikTok instead of actually protecting the data of US citizens.

If you have threat models in which the Chinese government might target you, assume that they know where your phone is and shut it off when going somewhere you...

This is a narrow objection to the IMO hyperbolic focus on government assault risks.

Whether or not you face government assault risks depends on what you do. Most people don't face government assault risks. Some people engage in work or activism that results in them having government assault risks.

The Chinese government has strategic goals, and most people are unimportant to those. Some people, however, work on topics like AI policy in which the Chinese government has an interest.

From a Paul Christiano talk called "How Misalignment Could Lead to Takeover" (from February 2023):

Assume we're in a world where AI systems are broadly deployed, and the world has become increasingly complex, where humans know less and less about how things work.

A viable strategy for AI takeover is to wait until there is certainty of success. If a 'bad AI' is smart, it will realize that it won't be successful if it tries to take over too early, so it doesn't try, and nothing looks like a problem.

So you lose when a takeover becomes possible, and some threshold of AIs behave badly. If all the smartest AIs...

Looking for blog platform/framework recommendations

I had a WordPress blog, but I don't like WordPress and I want to move away from it.

Substack doesn't seem like a good option because I want high customizability and multilingual support (my blog is going to be in English and Hebrew).

I would like something that I can use for free with my own domain (so not Wix).

The closest thing I found to what I'm looking for was MkDocs Material, but it's still geared too much towards documentation, and I don't like its blog functionality enough.

Other requirements: Da...

decision theory is no substitute for utility function

some people, upon learning about decision theories such as LDT and how they cooperate on problems such as the prisoner's dilemma, end up believing the following:

my utility function is about what i want for just me; but i'm altruistic (/egalitarian/cosmopolitan/pro-fairness/etc) because decision theory says i should cooperate with other agents. decision theoretic cooperation is the true name of altruism.

it's possible that this is true for some people, but in general i expect that to be a mistaken anal...

It certainly is possible! In more decision-theoretic terms, I'd describe this as "it sure would suck if agents in my reference class just optimized for their own happiness; it seems like the instrumental thing for agents in my reference class to do is maximize for everyone's happiness". Which is probly correct!

But as per my post, I'd describe this position as "not intrinsically altruistic" — you're optimizing for everyone's happiness because "it sure would suck if agents in my reference class didn't do that", not because you intrinsically value that everyone be happy, regardless of reasoning about agents and reference classes and veils of ignorance.

In my fantasies, if I ever were to get that god-like glimpse at how everything actually is, with all that is currently hidden unveiled, it would be something like the feeling you have when you get a joke, or see a "magic eye" illustration, or understand an illusionist's trick, or learn to juggle: what was formerly perplexing and incoherent becomes in a snap simple and integrated, and there's a relieving feeling of "ah, but of course."

But it lately occurs to me that the things I have wrong about the world are probably things I've grasped at exactly because ...

And then today I read this: “We yearn for the transcendent, for God, for something divine and good and pure, but in picturing the transcendent we transform it into idols which we then realize to be contingent particulars, just things among others here below. If we destroy these idols in order to reach something untainted and pure, what we really need, the thing itself, we render the Divine ineffable, and as such in peril of being judged non-existent. Then the sense of the Divine vanishes in the attempt to preserve it.” (Iris Murdoch, Metaphysics as a Guide to Morals)

Pain is the consequence of a perceived reduction in the probability that an agent will achieve its goals.

In biological organisms, physical pain [say, in response to a limb being removed] is an evolutionary consequence of the fact that organisms with the capacity to feel physical pain avoided situations where their long-term goals [e.g. locomotion to a favourable position with the limb] which required the subsystem generating pain were harmed.

This definition applies equally to mental pain [say, the pain felt when being expelled from a group of allies] w...

In biological organisms, physical pain [say, in response to a limb being removed] is an evolutionary consequence of the fact that organisms with the capacity to feel physical pain avoided situations where their long-term goals [e.g. locomotion to a favourable position with the limb] which required the subsystem generating pain were harmed.

How many organisms other than humans have "long-term goals"? Doesn't that require a complex capacity for mental representation of possible future states?

Am I wrong in assuming that the capacity to experience "pain" is...

There have been multiple occasions where I've copied and pasted email threads into an LLM and asked it things like:

- What is person X saying?

- What are the cruxes in this conversation?

- Summarise this conversation.

- What are the key takeaways?

- What views are being missed from this conversation?

I really want an email plugin that basically brute forces rationality INTO email conversations.

That seems fair enough!

quick thoughts on LLM psychology

LLMs cannot be directly anthropomorphized. Though something like “a program that continuously calls an LLM to generate a rolling chain of thought, dumps memory into a relational database, can call from a library of functions which includes dumping to and recalling from that database, receives inputs that are added to the LLM context” is much more agent-like.
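To make that description concrete, here is a minimal sketch of such a wrapper program. Everything named here is an assumption for illustration: `scripted_llm` is a hypothetical stand-in for a real model call, the `CALL name arg` convention for function calls is invented, and an in-memory SQLite table plays the role of the relational database.

```python
import sqlite3
from typing import Callable, Dict, List, Optional

MAX_CONTEXT_LINES = 8  # assumed size of the rolling context window


def scripted_llm(context: str) -> str:
    """Hypothetical stand-in for an LLM: stores any fresh input, else idles."""
    lines = context.splitlines()
    last = lines[-1] if lines else ""
    if last.startswith("input: "):
        return "CALL remember " + last[len("input: "):]
    return "idle"


class Agent:
    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.context: List[str] = []  # rolling chain of thought
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE memory (id INTEGER PRIMARY KEY, text TEXT)")
        # Library of functions the LLM may call, including memory dump/recall.
        self.tools: Dict[str, Callable[[str], str]] = {
            "remember": self.remember,
            "recall": self.recall,
        }

    def remember(self, text: str) -> str:
        self.db.execute("INSERT INTO memory (text) VALUES (?)", (text,))
        return "stored"

    def recall(self, query: str) -> str:
        rows = self.db.execute(
            "SELECT text FROM memory WHERE text LIKE ? ORDER BY id",
            (f"%{query}%",),
        ).fetchall()
        return "; ".join(row[0] for row in rows)

    def step(self, new_input: Optional[str] = None) -> str:
        # External inputs are appended to the LLM context.
        if new_input is not None:
            self.context.append(f"input: {new_input}")
        thought = self.llm("\n".join(self.context))
        self.context.append(f"thought: {thought}")
        # Dispatch a function call if the thought follows the assumed format.
        parts = thought.split(" ", 2)
        if len(parts) == 3 and parts[0] == "CALL" and parts[1] in self.tools:
            self.context.append(f"result: {self.tools[parts[1]](parts[2])}")
        # Keep only the most recent lines, so the chain of thought "rolls".
        self.context = self.context[-MAX_CONTEXT_LINES:]
        return thought


agent = Agent(scripted_llm)
agent.step("the door code is 42")
agent.step()
```

The point of the sketch is only that the agent-like properties (persistence, memory, acting on inputs) live in the loop around the model rather than in the model call itself.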

Humans evolved feelings as signals of cost and benefit — because we can respond to those signals in our behaviour.

These feelings add up to a “utility function”, something ...

Hypothesis: there's a way of formalizing the notion of "empowerment" such that an AI with the goal of empowering humans would be corrigible.

This is not straightforward, because an AI that simply maximized human POWER (as defined by Turner et al.) wouldn't ever let the humans spend that power. Intuitively, though, there's a sense in which a human who can never spend their power doesn't actually have any power. Is there a way of formalizing that intuition?
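For reference, the POWER definition in Turner et al.'s "Optimal Policies Tend to Seek Power" is, as far as I can reconstruct it (the notation below is my transcription, not a quotation):

$$
\mathrm{POWER}_{\mathcal{D}}(s, \gamma) \;=\; \frac{1-\gamma}{\gamma}\; \mathbb{E}_{R \sim \mathcal{D}}\!\left[\, V^{*}_{R}(s, \gamma) - R(s) \,\right]
$$

Here $s$ is a state, $\gamma$ the discount factor, $\mathcal{D}$ a distribution over reward functions, and $V^{*}_{R}$ the optimal value function for reward $R$. Read this way, POWER measures how well an agent at $s$ could do on average across many possible goals, which is why a maximizer of it would favor states that keep options open over states where the options have been cashed in.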

The direction that seems most promising is in terms of counterfactuals (or, alternatively, Pearl's do-ca...

Such that you can technically do anything you want--you have maximal power/empowerment--but the super-majority of buttons and button combinations you are likely to push result in increasing the number of paperclips.

I think any model of a rational agent needs to incorporate the fact that they're not arbitrarily intelligent, otherwise none of their actions make sense. So I'm not too worried about this.

...If you make an empowerment calculus that works for humans who are atomic & ideal agents, it probably breaks once you get a superintelligence who can likely

Hypothesis, super weakly held and based on anecdote:

One big difference between US national security policy people and AI safety people is that the "grieving the possibility that we might all die" moment happened, on average, more years ago for the national security policy person than the AI safety person.

This is (even more weakly held) because the national security field has existed for longer, so many participants literally had the "oh, what happens if we get nuked by Russia" moment in their careers in the Literal 1980s...

What would the minimal digital representation of a human brain & by extension memories/personality look like?

I am not a subject matter expert. This is armchair speculation and conjecture, the actual reality of which I expect to be orders of magnitude more complicated than my ignorant model.

The minimal physical representation is obviously the brain itself, but to losslessly store every last bit of information —IE exact particle configurations— as accurately as it is possible to measure is both nigh-unto-impossible and likely unnecessary considering the ...

Yes, thanks!

I want a word that's like "capable" but clearly means the things you have the knowledge or skill to do. I'm clearly not capable of running a hundred miles an hour or catching a bullet in my bare hand. I'm not capable of bench pressing 200 lbs either; that's pretty likely in the range of what I could do if I worked out and trained at it for a few years, but right this second I'm not in that kind of shape. In some senses, I'm capable of logging into someone else's LessWrong account- my fingers are physically capable of typing their password- but I don't have ...

Q. "Can you hold the door?" A. "Sure."

That's straightforward.

Q. "Can you play the violin at my wedding next year?" A. "Sure."

Colloquial language would imply that not only am I willing and able to do this, but I already know how to play the violin. Sometimes, what I want to answer is that I'm willing to learn, but you should know that I currently don't know how to play.

Which I can say, it just takes more words.

A semi-formalization of shard theory. I think that there is a surprisingly deep link between "the AIs which can be manipulated using steering vectors" and "policies which are made of shards."[1] In particular, here is a candidate definition of a shard theoretic policy:

A policy has shards if it implements at least two "motivational circuits" (shards) which can independently activate (more precisely, the shard activation contexts are compositionally represented).

By this definition, humans have shards because they can want food at the same time as wantin...

I think this is also what I was confused about -- TurnTrout says that AIXI is not a shard-theoretic agent because it just has one utility function, but typically we imagine that the utility function itself decomposes into parts e.g. +10 utility for ice cream, +5 for cookies, etc. So the difference must not be about the decomposition into parts, but the possibility of independent activation? But what does that mean? Perhaps it means: The shards aren't always applied, but rather only in some circumstances does the circuitry fire at all, and there are circums...

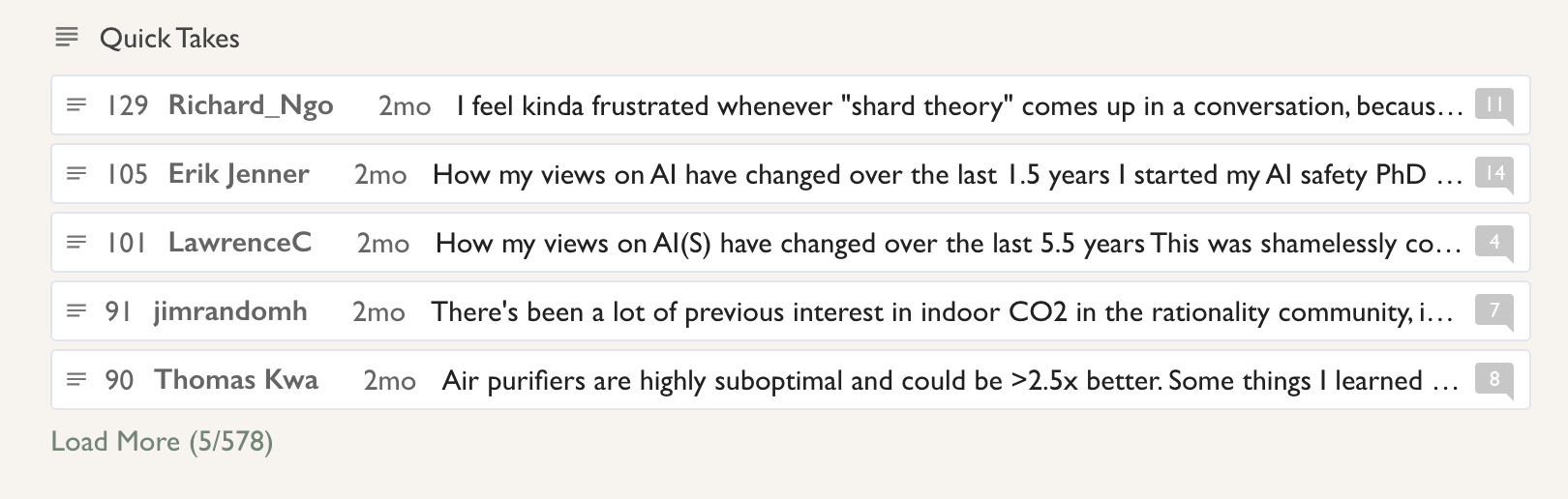

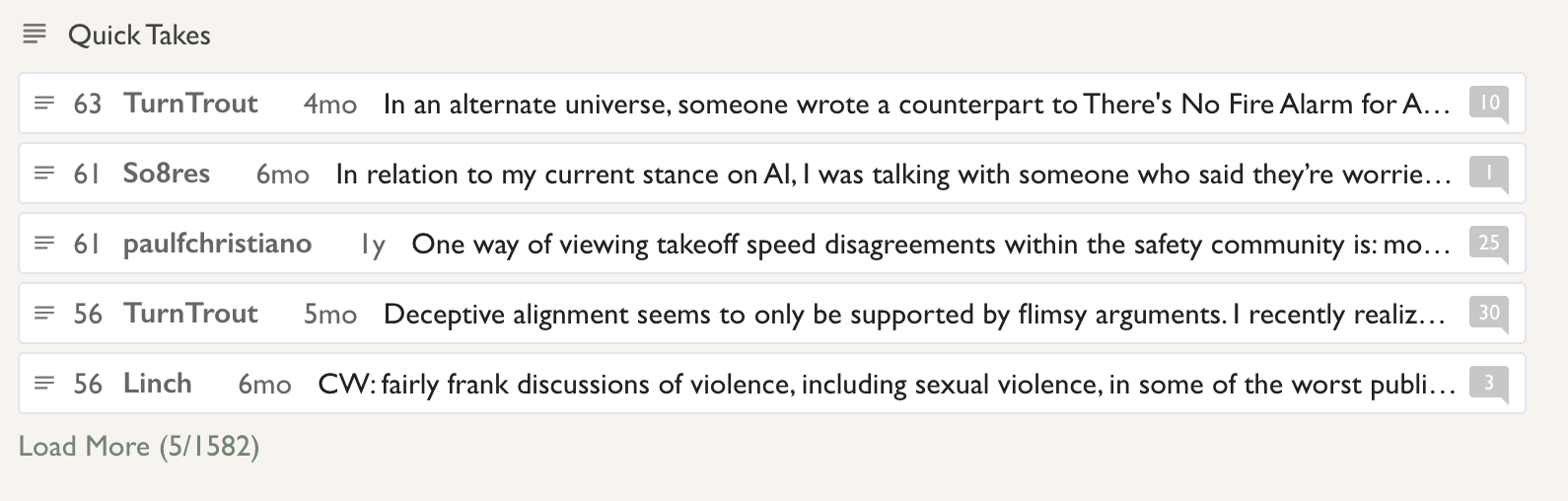

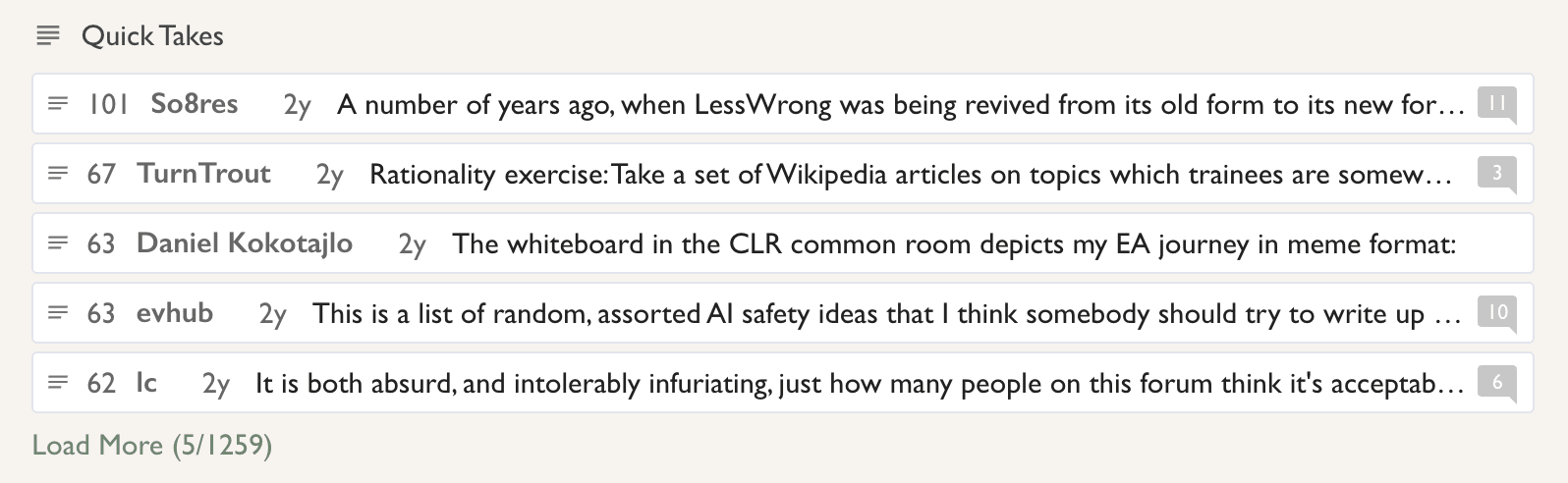

You can! Just go to the all-posts page, sort by year, and the highest-rated shortform posts for each year will be in the Quick Takes section:

2024:

2023:

2022:

Detangle Communicative Writing and Research

One reason why I never finish any blog post is probably that I immediately start writing it. I think it is better to first build a very good understanding of whatever I'm trying to understand. Only when I'm sure I have understood do I start to create a very narrowly scoped writeup.

Doing this has two advantages. First, it speeds up the research process, because writing down all your thoughts is slow.

Second, it speeds up the writing of the final document. You are not confused about the thing, and you ...

Be Confident in your Processes

I thought a lot about what kinds of things make sense for me to do to solve AI alignment. That did not make me confident that any particular narrow idea that I have will eventually lead to something important.

Rather, I'm confident that executing my research process will over time lead to something good. The research process is:

- Take some vague intuitions

- Iteratively unroll them into something concrete

- Update my models based on new observations I make during this overall process.

I think being confident, i.e. not feeling hop...

@jessicata once wrote "Everyone wants to be a physicalist but no one wants to define physics". I decided to check the SEP article on physicalism and found that, yep, it doesn't have a definition of physics:

...